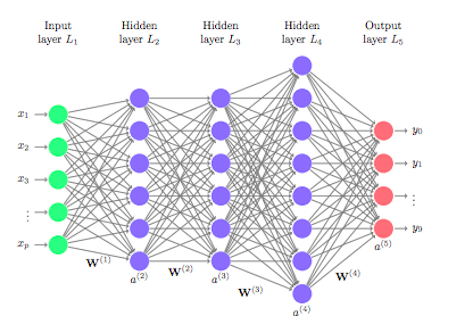

Deep learning is a field of artificial intelligence built on neural networks with multiple hidden layers. These stacked layers give it a decision-making structure loosely modeled on human thinking, which makes it important for solving many classification problems. Its biggest advantage is that it can achieve highly successful results on problems that cannot be expressed with an explicit mathematical formula. In the literature, it is used for image processing and classification as well as for natural language processing and sequence classification. Examples from image classification include cancer detection, object recognition, and emotion analysis from facial expressions. Examples from text and sequence processing include language analysis, sentiment analysis of text, detection of expression disorders, and classification of protein sequences.

All of these applications follow the same pipeline: the data is acquired, preprocessed according to the problem, and then passed to the neural network layers to make a prediction. Two basic architectures are used in these layers: the Convolutional Neural Network (CNN) and the Recurrent Neural Network (RNN). CNNs tend to be more successful on images, while RNNs are better suited to long texts and other sequences. After preprocessing, the CNN or RNN layer extracts features from the data, and its output is passed to a fully connected (Dense) layer, which performs the classification.
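The pipeline described above (preprocessing, recurrent feature extraction, then a Dense classification layer) can be sketched in plain Python. This is only an illustrative sketch, not the model used in bHLHDB: the one-hot encoding, the layer sizes, the two output classes, the example sequence, and all function names are assumptions made for the example, and the weights are random and untrained, so the output probabilities carry no meaning.

```python
import math
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard residues


def one_hot(sequence):
    """Preprocessing (assumed): encode each residue as a 20-dimensional one-hot vector."""
    index = {aa: i for i, aa in enumerate(AMINO_ACIDS)}
    vectors = []
    for residue in sequence:
        vec = [0.0] * len(AMINO_ACIDS)
        vec[index[residue]] = 1.0
        vectors.append(vec)
    return vectors


def random_matrix(rows, cols, rng):
    """Untrained placeholder weights drawn uniformly from [-0.5, 0.5]."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def rnn_features(inputs, w_in, w_rec):
    """Feature extraction: a simple tanh recurrent layer; returns the final hidden state."""
    hidden = [0.0] * len(w_rec)
    for x in inputs:
        from_input = matvec(w_in, x)
        from_hidden = matvec(w_rec, hidden)
        hidden = [math.tanh(a + b) for a, b in zip(from_input, from_hidden)]
    return hidden


def dense_softmax(features, w_out):
    """Classification: fully connected layer followed by a softmax over the classes."""
    logits = matvec(w_out, features)
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(sequence, hidden_size=8, n_classes=2, seed=0):
    """Run the whole pipeline with randomly initialised (untrained) weights."""
    rng = random.Random(seed)
    w_in = random_matrix(hidden_size, len(AMINO_ACIDS), rng)
    w_rec = random_matrix(hidden_size, hidden_size, rng)
    w_out = random_matrix(n_classes, hidden_size, rng)
    features = rnn_features(one_hot(sequence), w_in, w_rec)
    return dense_softmax(features, w_out)


probs = predict("MKRLSE")  # hypothetical short peptide, for illustration only
print(probs)  # one probability per class; the values sum to 1
```

In practice the weights would be learned from labeled data, and a deep learning framework would normally replace these hand-written layers.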

To classify the transcription factors in bHLHDB, an RNN-based Long Short-Term Memory (LSTM) network was used.

References

image: https://towardsdatascience.com